Tuesday, June 2, 2009

Something more secure than Notepad

But, the notepad file is inconvenient. I keep it on an external drive that's plugged into my home computer. I hardly use my home computer. So, when I need to look up a password, it's a pain. (I have most of them memorized, but Bank of America, for example, is just a bunch of numbers.)

Last night, I started looking for an online notepad service where I can type in all of my information and save it on someone else's server, preferably using the honor system. Surprisingly, such sites are not abundant, but I stumbled across the concept of "online password storage". There are several sites out there, obviously managed by paranoid maniacs, that think simply storing passwords in clear text is not the way to go. So, they do things like "encrypt" and require you to "login". It's pretty bizarre. These guys are taking security way too seriously. Honestly, what is someone really going to do if they get a list of all of my credit card numbers and PINs and social security number?

I looked at a few options, but ended up trying http://passpack.com. You can store 100 passwords for free. Each password record gives you plenty of fields, including a free-form note field, to put in anything you need. This is good for the banks since I usually track more info than just the site's id and password (e.g., cc number, expiration date, CVV). It gives you quick links to copy information from the record into the clipboard.

You log in to the site using your id and password. But, before you can get to your needlessly encrypted highly personal data, you have to type in your UNPACK password. It seems that they're using this to derive the key that encrypts the data. If you lose the UNPACK password, you're done. They can't provide you a new one (hence my speculation that the key must come from the password and is never stored on their end).
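If that speculation is right, the "you're done" part follows naturally: the key only exists while you're typing the password. Here is a minimal sketch of the idea, assuming a PBKDF2-derived AES key. This is not PassPack's actual code; the class name, iteration count and parameter choices are all made up for illustration.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Hypothetical illustration only: the key is derived from the password on demand
// and never stored, so a lost password means the data cannot be decrypted.
public static class PackSketch
{
    // Derive a 256-bit AES key from the password and a per-user salt (PBKDF2).
    private static byte[] DeriveKey(string password, byte[] salt)
    {
        using (var kdf = new Rfc2898DeriveBytes(password, salt, 100000, HashAlgorithmName.SHA256))
        {
            return kdf.GetBytes(32);
        }
    }

    public static byte[] Encrypt(string plainText, string password, byte[] salt, byte[] iv)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = DeriveKey(password, salt);
            aes.IV = iv; // 16 bytes for AES
            using (ICryptoTransform encryptor = aes.CreateEncryptor())
            {
                byte[] data = Encoding.UTF8.GetBytes(plainText);
                return encryptor.TransformFinalBlock(data, 0, data.Length);
            }
        }
    }

    public static string Decrypt(byte[] cipherText, string password, byte[] salt, byte[] iv)
    {
        using (Aes aes = Aes.Create())
        {
            aes.Key = DeriveKey(password, salt);
            aes.IV = iv;
            using (ICryptoTransform decryptor = aes.CreateDecryptor())
            {
                byte[] data = decryptor.TransformFinalBlock(cipherText, 0, cipherText.Length);
                return Encoding.UTF8.GetString(data);
            }
        }
    }
}
```

The server in this sketch would only ever hold the salt, the IV and the ciphertext. Nobody on their end could hand you a replacement password that opens the old data.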

The site is clean and makes good use of ajax. Pretty much every time you click a link, though, you get a fancy shmancy progress bar. I don't like that. The next page shouldn't take so long to load that it needs a progress bar.

So far, I really like it. I have entered 4 passwords, which means I have less than 100 free passwords remaining because I already used four of them as I already stated at the beginning of this major run-on sentence. I'm not going to go nuts and figure out, exactly, how many more that leaves me, but I have at least 20 more to go of the 100, which is enough for me.

It offers many advanced features, such as sharing and messaging, but I haven't played with those. I'm really only interested in using it for personal uses.

Check it out: http://passpack.com

Friday, May 29, 2009

Serious memory leak with TRUE in .Net

Greetings

At work, we’ve been running PSR tests against our primary application. Last night, the application (which runs as a Windows service) was on an obvious spiral into the drain of despair. The memory was steadily increasing. When it reached 1.5 gigs, the application stopped responding altogether.

That’s not good.

We were going to just not say anything and hope for the best, but the more level-headed of us thought that maybe we should take a look. I was completely against it and wanted to sacrifice a goat instead, but I was overruled. (I seem to get overruled every time I want to sacrifice animals.)

I started by reviewing the code. I found some connection objects that weren’t being properly cleaned up. I ordered 50 verbal lashes for the offending perps, but moved on. It wasn’t significant enough to be the memory leak, but did spring an emotional leak in the bowels of my soul. (Please, just use the using statement. That’s all I ask.)
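For anyone who needs the reminder, here is a small sketch of what the using statement buys you. FakeConnection is a made-up stand-in (not our real connection class) so that the sample compiles without a database.

```csharp
using System;

// Hypothetical stand-in for a real connection object such as a database connection.
public sealed class FakeConnection : IDisposable
{
    public bool IsOpen { get; private set; }

    public void Open() { IsOpen = true; }

    // Dispose is where a real connection would be closed and released.
    public void Dispose() { IsOpen = false; }
}

public static class UsingDemo
{
    // Returns true if the connection was cleaned up even though the work failed.
    public static bool DisposedAfterFailure()
    {
        var connection = new FakeConnection();
        try
        {
            using (connection)
            {
                connection.Open();
                throw new InvalidOperationException("query failed");
            }
        }
        catch (InvalidOperationException)
        {
            // By the time we get here, the using block has already called Dispose().
        }
        return !connection.IsOpen;
    }
}
```

The point is that cleanup happens on every exit path, including the ones nobody remembered to code for.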

But I digress. Today, we ran ANTS against it to see where all that memory was going. It didn’t help, which is unusual.

So, we did it the old-fashioned way. We started commenting things out of the main method to find which one was the problem. The object does the following:

- Receives an xml message

- Removes duplicate nodes

- Calls a stored procedure to get more information for each of the nodes

- Does an Xslt Transform

- Publishes the message via WCF

Of all the things there, I was most suspicious of the WCF client and least suspicious of XSLT. Imagine my chagrin when it turned out to be the XSLT method.

// XSLT
XslCompiledTransform transform = new XslCompiledTransform(true);
using (XmlReader xmlReader = new XmlTextReader(xsltFile))
{
    transform.Load(xmlReader);
}

The problem is the TRUE parameter when the object is instantiated. If you remove it, or change it to false, then everything is stable.
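A sketch of a calmer version, under some assumptions. The only thing we actually established is that dropping the flag (the constructor's enableDebug parameter) made memory stable. Loading each stylesheet once and reusing it is an extra precaution I'm adding here, and TransformCache is a hypothetical helper, not our production code.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;
using System.Xml;
using System.Xml.Xsl;

// Hypothetical helper: loads each stylesheet once, without debug mode, and reuses it.
public static class TransformCache
{
    private static readonly ConcurrentDictionary<string, XslCompiledTransform> Cache =
        new ConcurrentDictionary<string, XslCompiledTransform>();

    public static XslCompiledTransform Get(string key, string stylesheetText)
    {
        return Cache.GetOrAdd(key, _ =>
        {
            // false = enableDebug off, which is the change that stabilized memory for us.
            var transform = new XslCompiledTransform(false);
            using (XmlReader reader = XmlReader.Create(new StringReader(stylesheetText)))
            {
                transform.Load(reader);
            }
            return transform;
        });
    }

    public static string Apply(string key, string stylesheetText, string inputXml)
    {
        XslCompiledTransform transform = Get(key, stylesheetText);
        var output = new StringWriter();
        using (XmlReader input = XmlReader.Create(new StringReader(inputXml)))
        using (XmlWriter writer = XmlWriter.Create(output, transform.OutputSettings))
        {
            transform.Transform(input, writer);
        }
        return output.ToString();
    }
}
```

The sample takes the stylesheet as a string only to stay self-contained; with a file on disk you would pass the path to XmlReader.Create instead.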

This is a surprisingly large bug. How can something as fundamental as the boolean value TRUE take down an application like that? Shouldn’t someone have tested that the booleans work properly before shipping? The very foundations of computing are based on boolean values; bits are either on or off… yes or no… 1 or 0. This isn’t rocket science! How can Microsoft ship a product that doesn’t fully support the word true?

I haven’t been this bothered about the .Net framework since I learned that the number 0 is also broken. Every time I try to divide any number by it, it breaks with some illogical math error (can’t divide by zero?). I can’t say that I’ve tried to divide every number, but I did get the vast majority… let’s just say that I’ve tested the theory enough times to be convinced that it is a problem.

I really love .NET and will continue to use it without reservation. But, the tough lesson is that you can’t take it for granted, especially if your logic is based on evaluations of some sort. Now that we’ve identified its limitations, we can use it more effectively.

If the problem were elsewhere… let’s say, if the problem were in the XslCompiledTransform object rather than the word true, that would be more understandable. Then we might be able to conclude that “XslCompiledTransform in debug mode leaks more than the Bush administration”. That’s something we could get our heads around and come to terms with... But a broken true!? Disappointing.

Monday, May 25, 2009

SpamArrest - You Got Me

Here is a big related post from April 2008: http://hamletcode.blogspot.com/2008/04/email-decision.html

I was experiencing email turmoil at the time, and settled on SpamArrest. I would've liked to use Gmail, but could not (at least not the way intended).

Since then, 2 significant things have happened with SpamArrest:

1 - I bought the lifetime subscription. At the time, I was still a fan. I'd been using it for years and planned to use it for years more.

2 - They introduced a JavaScript bug. I reported the bug to them on October 27th 2008 after waiting several weeks to see if they would correct it themselves.

My Email to them

Greetings

This has been happening for quite a while now. I waited in case it was going to be fixed, but it hasn't been.

There's a bug on the login screen. It attempts to attach an event to the REMEMBER ME checkbox. But, if you're already logged in (because it remembered you), then the control doesn't exist, so it can't attach the event. This results in an error dialog:

A runtime error has occurred.

Do you wish to debug?

Line 1726

Error: 'addEventListener' is null or not an object.

It's not a big deal, but after a few weeks it's getting a little annoying. (I'm a developer, so I can't disable the alerts.)

Jay

Rather than trying the steps listed, they suggested that my browser must be "acting up" and that I install Firefox or Chrome. That's great... thanks. Great advice. I responded pointing out exactly where the JavaScript was failing and why, and showed that it fails in Firefox 3 too. I listed 3 bulleted steps to reproduce. They responded saying "thank you, we'll forward that to our development team".

That was nearly 7 months ago. They still haven't fixed it. It's going to fail in every browser because it's just bad logic. When you're already logged in, there isn't a "REMEMBER ME" checkbox. The JavaScript looks for it anyway, then fails when it can't find it.

Another of my favorite problems is REPLY ALL. If you click REPLY ALL, and forget to remove your own name from the address list, you get an onslaught of spam messages. Once you delete the messages, you don't get any more, so it's not like it auto-approved them. It's just that you get a whole bunch that you have to clean up before you're back to normal.

In my previous blog post, I mentioned the searches I conducted in their help system to find simple things like IMAP and DISK QUOTA. To find DISK QUOTA information, you have to search for "SPACE". To find IMAP information, you have to read an article entitled "WHAT IS A POP SERVER?" Well, I already know what a pop server is. If I'm looking for IMAP server information, why would I click that?

I strongly regret the lifetime subscription. I asked for a refund on it, and they responded saying no, but then asked me what the problems were. I didn't answer. How many times do I need to tell them what the problems are? At this point, I continue to use SPAM ARREST only out of laziness, despite the lifetime subscription. Maybe I can sell it on eBay or something.

Now, the webmail client has a REFER FRIEND tab on which they ask me to refer business to them for savings. I already have a strongly regretted lifetime subscription... how does referring a friend to SpamArrest help me? Furthermore, even if I were still a fan, that tab would be useless anyway. I've been referring people to SpamArrest for years, not because I want some type of credit, but because it's a good service. Only a few of those referrals have actually started using it, but that wasn't due to a lack of effort on my part. (Most people find Gmail's junk filters to be sufficient.)

My official stance has changed. I will no longer recommend SpamArrest. In fact, I will actively express disappointment. Software is supposed to evolve; their web client continues to be stagnant (and broken) where it counts. The pages still say COPYRIGHT 2006!!! Has anyone looked at a calendar recently?

It's OK to have flaws. It's not OK to never fix them. I'm disappointed in them for not evolving, and I'm really annoyed that I fell for the "lifetime subscription" scam.

Monday, May 11, 2009

Chewing

In order to chew, you need teeth.

Jack now has one that finally broke the gum line. This means that he can chew very small, very localized foods!

Tuesday, May 5, 2009

Linq, Attribute and Reflection, all in one

Greetings

I was goofing around with some reflection stuff, and wanted to use LINQ to build an xml document for me. It started off as three different steps, and whittled its way down to one.

- Gets a list of all of the properties in the current class that have the [ServiceProperty] attribute

- Creates an XDocument containing information about the property including the value, and all of the meta-data added by the [ServiceProperty] attribute

The only thing I don’t like is that it builds the entire wrapper, even if attribute is null. When the attribute is null, we don’t care about anything, and should just continue. But, as is, property.GetValue() executes even when we don’t need it.
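One possible way around that, sketched under some assumptions: give the attribute its own let, filter on it, and only call GetValue() inside the select, so it runs just for the properties that survive the where. The ServicePropertyAttribute and SampleService below are stand-ins invented so that the sample compiles by itself; the real attribute has more on it (a default value, for one).

```csharp
using System;
using System.Linq;
using System.Reflection;
using System.Xml.Linq;

// Hypothetical attribute shape, reduced to what the sample needs.
[AttributeUsage(AttributeTargets.Property)]
public sealed class ServicePropertyAttribute : Attribute
{
    public string DisplayName { get; set; }
    public string Description { get; set; }
    public bool Required { get; set; }
}

public class SampleService
{
    [ServiceProperty(DisplayName = "Host name", Description = "Server to call", Required = true)]
    public string Host { get; set; }

    // No attribute here, so GetValue() is never called for this property.
    public int NotDescribed { get; set; }

    public XDocument Describe()
    {
        return new XDocument(
            new XDeclaration("1.0", "utf-8", "yes"),
            new XElement("properties",
                from property in GetType().GetProperties(BindingFlags.Instance | BindingFlags.Public)
                let attribute = Attribute.GetCustomAttribute(property, typeof(ServicePropertyAttribute))
                    as ServicePropertyAttribute
                where attribute != null
                select new XElement("property",
                    new XAttribute("name", property.Name),
                    new XElement("display-name", attribute.DisplayName),
                    new XElement("description", attribute.Description),
                    new XElement("required", attribute.Required),
                    // The value is read here, after the filter has been applied.
                    new XElement("value", property.GetValue(this, null)),
                    new XElement("data-type", property.PropertyType.FullName))));
    }
}
```

For comparison, here is the version I actually ended up with: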

XDocument doc = new XDocument(
    new XDeclaration("1.0", "utf-8", "yes"),
    new XElement("properties",
        (
            from property in GetType().GetProperties(BindingFlags.Instance | BindingFlags.Public)
            let wrapper = new
            {
                Property = property,
                Attribute = Attribute.GetCustomAttribute(property, typeof(ServicePropertyAttribute)) as ServicePropertyAttribute,
                Value = property.GetValue(this, BindingFlags.Public | BindingFlags.Instance, null, null,
                    CultureInfo.InvariantCulture)
            }
            where wrapper.Attribute != null
            select
                new XElement("property",
                    new XAttribute("name", wrapper.Property.Name),
                    new XElement("display-name", wrapper.Attribute.DisplayName),
                    new XElement("default-value", wrapper.Attribute.DefaultValue),
                    new XElement("description", wrapper.Attribute.Description),
                    new XElement("required", wrapper.Attribute.Required),
                    new XElement("value", wrapper.Value),
                    new XElement("data-type", wrapper.Property.PropertyType.FullName)
                )
        )
    )
);

Saturday, April 11, 2009

Jts 3 Development Wiki

I found an excellent free wiki from ScrewTurn. I was up and running in about 10 minutes. Excellent.

Anyway, I created a wiki to document the Jts 3 development effort.

Tuesday, March 31, 2009

Jack’s First SQL Query

Jack is just about 6 months old now. As anticipated, he has expressed an interest in programming. He was sitting on my lap yesterday as I was tweaking a query. He started to pound on the keyboard, so I opened up a fresh window for him so that he could express his programming desires uninhibited by my existing work.

Here is what he came up with

“  . ”

That’s 2 spaces, a period, and another space. Not only should you appreciate the query itself, but you should appreciate the manner in which it was written. Some people have a vague idea of what they are doing and they sit down to figure it out through a cycle of research/trial-and-error. Others know what has to be done and simply do it; solving the problem takes exactly as long as it takes to type in the solution. I am of the latter classification, and it would seem that whichever gene enables that skill has been passed to the offspring. Jack was Picasso and the keyboard was his tapestry; there was no delay or thought, simply action. It was so natural that a casual observer may have perceived it as nothing more than the random flailing of his 2 topmost limbs.

As a dad I proudly exclaim that this is a tremendous victory. While not completely void of issues, it is an excellent first step in solving many well known SQL puzzles. I love the initiative, the attitude, and the overall spirit of the effort. It takes more than skill to be a programmer; you need to love it. For that he gets an A+.

But, as a software architect and a mentor, I must be fair and point out the very rare opportunities for improvement.

- Excessive white spaces – surely we don’t need spaces on both sides of the period. Perhaps he should consider a tab rather than consecutive leading spaces. I was going to suggest this to him, but he chose that moment to engage in a massive crap. He was concentrating very hard on the pushing exercise, with his brow furrowed and his face turning red from the effort. Relative to his intestinal action and diaper trauma, the tab issue seemed trivial so was left unsaid.

- The period – in this context, it doesn’t actually do anything, which is OK. I see that the period is a solution; it’s just that we don’t understand the problem because we're dumb. I only mention it here because he didn’t comment it.

Clearly Jack is on the road to programming greatness.

Sunday, March 29, 2009

XQuery – How ye disappoint

I’m updating a legacy app from VB6 to ASP and then to .NET. The ASP is a transitional step so that I can stop dealing with COM+ objects.

Anyhoo… I picked one of the common pages. It prints a grid, basically.

- page calls a vbscript function

- the vbscript function executes a query and gets back a recordset

- a series of nested loops convert the recordset to xml

- the xml is returned to the page

- the page uses XSLT to convert the XML to HTML

Sweet.

Now that I’m converting it to .NET, though, I wanted to try exciting new possibilities. The application is on SQL 2000, but updating to 2005 is a reasonable expectation. (I won’t push my luck with 2008).

The Intent

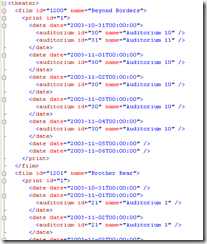

The idea is to transform this data

into this xml

In the current app, that transformation is done in ASP VbScript.

SQL XML

I’ve dabbled with some of the XML capabilities in 2005. I’ve used it to join to tables and to shred the xml. I’ve also used it to create xml documents without that pesky !TAG! syntax. But, they were all meager efforts.

I started by hoping that such meagerness would be sufficient.

declare @startDate datetime
declare @endDate datetime
declare @theaterId int

select
    @startDate = '10/31/2003',
    @endDate = '11/6/2003',
    @theaterId = 170

select
    v.FilmId "film/@film-id",
    v.FilmName "film/@film-name",
    v.PrintId "film/print/@print-id",
    dates.[Date] "film/print/date/@date",
    a.AuditoriumName "film/print/date/auditorium/@auditorium-name",
    a.AuditoriumId "film/print/date/auditorium/@auditorium-id"
from

But it’s not. That built the hierarchy, but it repeats itself over and over. It doesn’t group itself the way I need. If there is a way to do everything I need by specifying the paths like that, then it would be a good day.

SQL XQUERY

Next, I started dabbling with XQUERY. My only XQUERY experience has been via SQL Server 2005, and in its simplest form.

I went Google-Crazy and read up on some stuff. I was able to write a query (albeit a crappy one) that does the job.

select @output.query('
<theater>
{
    for $filmId in distinct-values(/theater/film/@film-id)
    return
    <film>
        { attribute id { $filmId }}
        { attribute name { /theater/film [@film-id = $filmId][1]/@film-name }}
        {
            for $printId in distinct-values(/theater/film [@film-id=$filmId]/@print-id)
            order by $printId
            return
            <print>
                { attribute id { $printId }}
                {
                    for $date in distinct-values(/theater/film [@film-id=$filmId and @print-id=$printId]/@date)
                    order by $date
                    return
                    <date>
                        {attribute date { $date }}
                        {
                            for $auditoriumId in distinct-values(/theater/film [@film-id=$filmId and @print-id = $printId and @date=$date]/@auditorium-id)
                            return
                            <auditorium>
                                { attribute id { $auditoriumId }}
                                { attribute name { /theater/film [@film-id=$filmId and @print-id = $printId and @date=$date and @auditorium-id=$auditoriumId]/@auditorium-name }}
                            </auditorium>
                        }
                    </date>
                }
            </print>
        }
    </film>
}
</theater>
')

How does this suck? Let me count the ways

- 4 levels of nesting. It’s not pretty. But, the VbScript has the same layers. (The logic is different, but it’s just as nested)

- Each layer has to go back to the top and work its way back down based on the key information collected thus far

- In the loops, I can only order by the loop indexer. For example: Auditorium. I don’t want to sort on “auditorium id”. I want to sort on display order. I can’t, because “auditorium id” is a value, not a node. If it was a node, I’d be able to get to a sibling attribute.

- It offers a handy distinct-values, but does not offer a handy distinct-nodes. (There are examples of how to do distinct-nodes, but the few I’ve seen use the LET statement, which you can’t do in SQL 2005)

What doesn’t suck

Obviously I’m having problems with it, but that may just be due to my staggering 45 minutes of inexperience with it.

- I like the syntax of specifying the attributes (shown) and elements (not shown) through the {} syntax

- I like that the comments are smiley faces (not shown). (: this is an xquery comment :)

- In principle, I like how you can do the layering.

The Problem

I got the XML that I want, but it’s slow. The SQL XQUERY consists of 2 parts: the query to get the data as xml, and the xquery to transform it the way I’d like it.

The first part comes back instantaneously. The 2nd part takes anywhere from 2 to 16 seconds. One time, it took a minute and 54 seconds?! It’s really inconsistent. I looked at the execution plan multiple times. Every time it says that the first query accounts for 0% of the time, and the 2nd query accounts for 100% of the time.

The legacy app does all it needs to do, including rendering it on the page, in a 1/2 second or less. You don’t even see it happen; you just click the link and the page renders.

I know that my xquery is amateur. If I can rewrite it the way it should be written and try again, maybe the results will be drastically improved. (At least I hope they are.)

Things that Would Help

- SQL 2008 supports the LET statement. If I had that in 2005, then I could assign node sets at the various levels, and treat that as the root for that level. Then it wouldn’t have to go to the top of the document every time. (At least, it seems like I’d be able to do that)

- If I could do a distinct-nodes instead of distinct-values, then as I loop through, I can get the other stuff I need relative to the attribute, e.g. $film-id/../@film-name.

- Knowledge of XQuery would sure be helpful.

Next Steps / Conclusions

I wanted the source doc to be hierarchical so that it would be an accurate representation of the data. Since the XQuery didn’t work, I may end up doing it in C#. Then, the page will use an XSLT to render it. (I’ll look into using XQUERY to render it, but I don’t think that’s a viable option yet).

I developed the original application starting in 2001. Over the first few years, I spent a lot of time performance testing the quickest way to get the data out of the database and onto a page. I always lean towards XML and XSLT so that you can easily render it different ways. I want to keep it transformable.

Of all the things I tried, the quickest thing has always been:

- Run the query and get back a flat dataset

- Use code to convert the dataset to xml

Despite the repeating data, and despite the manual conversion, it wins every time.
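To make the second step concrete, here is a rough sketch of what "use code to convert the dataset to xml" could look like in C# with LINQ to XML. ScheduleRow is a hypothetical shape for one flat row. The element and attribute names are borrowed from the XQuery above, and the date format is my own assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Xml.Linq;

// Hypothetical flat row, one per film/print/date/auditorium combination.
public sealed class ScheduleRow
{
    public int FilmId { get; set; }
    public string FilmName { get; set; }
    public int PrintId { get; set; }
    public DateTime Date { get; set; }
    public int AuditoriumId { get; set; }
    public string AuditoriumName { get; set; }
}

public static class TheaterXml
{
    // Groups the repeating flat rows into film > print > date > auditorium.
    public static XElement Build(IEnumerable<ScheduleRow> rows)
    {
        return new XElement("theater",
            from film in rows.GroupBy(r => new { r.FilmId, r.FilmName })
            select new XElement("film",
                new XAttribute("id", film.Key.FilmId),
                new XAttribute("name", film.Key.FilmName),
                from print in film.GroupBy(r => r.PrintId)
                orderby print.Key
                select new XElement("print",
                    new XAttribute("id", print.Key),
                    from date in print.GroupBy(r => r.Date)
                    orderby date.Key
                    select new XElement("date",
                        new XAttribute("date", date.Key.ToString("yyyy-MM-dd", CultureInfo.InvariantCulture)),
                        from auditorium in date.GroupBy(r => new { r.AuditoriumId, r.AuditoriumName })
                        select new XElement("auditorium",
                            new XAttribute("id", auditorium.Key.AuditoriumId),
                            new XAttribute("name", auditorium.Key.AuditoriumName))))));
    }
}
```

Each level groups only the rows handed down from its parent, so nothing has to go back to the top of the document the way the XQuery did. Sorting auditoriums by something like display order would just be one more orderby on the group, assuming the row carried that column.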

Things I may try

- Convert the SQL XML to my XML via XSLT

- Convert the SQL XML to my XML via C# code (the old fashioned way with a new language)

- Read more about XQuery to determine how off-target my query really is

Friday, March 27, 2009

Adventures in SCM

After a long hiatus, I am resuming work on JTS. JTS is a theater management system that Muvico (http://muvico.com) has been using since 2001ish.

2001. That was years ago. Those were the days of ASP and COM+. .NET was still called ASP+. I was still prefixing everything I did with the letter J.

The application now spans all generations of development technologies from ASP to .NET 3.5 SP1. Swell.

The first big effort is to downgrade all of the COM+ to ASP, because ASP is easier to work with these days. The second big effort will be to selectively convert the modules to the IN-DEVELOPMENT JTS 3 API.

I started researching what I can use for SCM. Ideally, I want it to be an online repository so that it doubles as a backup service. (I use MOZY, but the client is dreadfully bad. It’s almost unusable at this point. They said they’re rewriting it, but we’ll see.)

My googling led me here: http://www.myversioncontrol.com

For $5/month, I get what I need. It’s Subversion-based, which I’ve never used before. The service is excellent and it’s very generously priced. I have a security concern about it, though. I sent an email and we’ll see what happens. (It never asks for an encryption key. I’d like to keep their eyes out of my code.)

MyVersionControl recommends 2 SVN clients: RAPID SVN and TORTOISE SVN.

I couldn’t get RAPID to do anything. I got a lot of exceptions. I then tried TORTOISE, which is a set of Windows Explorer shell extensions. That worked out pretty well. It took a little getting used to, though. My SCM exposure has been limited to VSS and StarTeam. This Subversion stuff is different.

Finally, I tackled VS integration, which ended up being an easy task. I found this product: http://visualsvn.com. I installed it and started using it. Piece of cake. VisualSVN, like MyVersionControl, offers a 30-day trial.

Everything went well. I spent some time adding some filters to weed out the files that I don’t need to control. I checked everything in. My repository is now 50% full. Now I have 30 days to see if this sense of joy is permanent or fleeting.

Monday, March 2, 2009

Getting back on the ball with the DvdFriend, RSS feeds etc.

Today, I decided that I wanted to get my RSS feeds under control. I’m not very RSS savvy… I just use Internet Explorer to subscribe to them; nothing fancy. I ventured out to find a good web solution. I came across this little startup called “Google” which has, among other things, a decent reader.

I haven’t used any other readers, so when I say “decent”, it’s not relative to anything else. It’s very functional, like Gmail. And, the user interface isn’t great, like Gmail. But, it’s pretty neat.

I have to rebuild a list of RSS feeds. If you come across this post, please let me know of your own personal feeds, and any others that you recommend. Please don’t assume that I already have it, even if I should.

Also, I downloaded and am currently using WINDOWS LIVE WRITER. Good stuff. This will greatly improve the readability for people who take offense to typos. (I’m thinking of someone particular. You know who you are.)

In a related story: I spent today getting a lot of stuff organized. I have multiple drives with duplicate code, documents, databases, etc. I’ve sorted through most of that. I also have some VMs:

1 – super secret side project that I dumped because it wasn’t respecting my time

2 – JTS

3 – Other development efforts, including DvdFriend

I’m getting all of those ducks in a line. The VM for #3 exists, but I haven’t set up the DvdFriend stuff yet. I’d like to get that going; I haven’t touched DvdFriend in months, and I’m itching to do some stuff. I started putting some prices and links in this week; Amazon and Netflix still work. Everyone else has changed their HTML, so the parser isn’t working. Oh well. 2 is better than none.

The last time I worked on DvdFriend, I made progress towards adding TV reviews at the SHOW, SEASON and EPISODE levels. Of course, that was months ago and I have no idea where I left off or how I did it. Let’s hope that I can read my own code.

Saturday, February 7, 2009

There's nothing wrong with a test hitting the database

But, that's the extent of my opinion of it. Write tests that test the code you're about to write. I'm not into mock objects. I don't debate the validity or non-validity of any one approach vs any other approach. I do what I have to do to produce a test that proves the code works. I really try to keep it simple. If I need a dummy implementation of an interface to prove something, then I spend 6 seconds to write the implementation.

But, being in a company where there are lots of people with much stronger opinions about it, I hear a lot of stuff. When is a unit test no longer a unit test but a functional test? Should unit tests be allowed to hit the database? What should tests do and not do? Yadda yadda. I do not doubt the importance of those conversations or the ramifications of the results; it's just not something I participate in. I'm more about the code and proving the code works; not the philosophy or implementation behind it.

One of those things that comes up quite a bit is "the tests should not hit the database". My response to most things is "well, it depends on the test". If you're writing tests that are implicitly hitting the database, then sure, in that case the database component should be swapped out with something simpler and faster without the environmental requirements. Yippee.

But, sooner or later, you come down to the object that actually does the writes to and/or reads from the database. I'm sure you can emulate it, but if the object is a db object, then I'm of the opinion that you should make sure it reads and writes to/from the db. I don't know where that opinion stands in the overall view of the agile/tdd community, but I have heard blanket statements that tests should not hit the database.

Last night, I got a call after hours asking me to look at some tests that were failing. I immediately stated it was environmental since the tests were 2 years old and hadn't been touched in 6 months, and then I set out to prove it. The cause of the failure was a missing row of "system delivered data" from the database that was there prior to the related project, and should always be there.

If my test had mocked the db activity rather than run it against the real scenarios, then we wouldn't have learned that the data was gone until someone fired up the product for real and tried to use it. The missing row was an adverse effect of a major database effort by another team. I wasn't involved with the fix, but as soon as it was identified they had no problem fixing it, so it seems to have been minor.

So is it a functional test or is it a unit test? I don't know, and it doesn't matter to me. I wrote a test to prove that the code works, and as soon as an environmental dependency vanished, the test failed. That's the important part.

Maybe there should've been a FitNesse test or some other automated test that would've tested the functionality within the website. Maybe that test does exist and we just didn't get to it yet. That's possible. But, it never got that far. They did the build and they ran the MbUnit tests, and we immediately knew there was a problem.

There is one takeaway from this: As a developer, the exception message allowed me to quickly identify what the problem was. But, it was implicit; I mentally traced it to the actual cause. My takeaway is to proactively check for this condition and throw an explicit error message.
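Something along these lines is what I have in mind. It's a sketch, not the real code: SystemDataGuard is a made-up name, and the dictionary stands in for whatever query loads the system delivered rows.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical guard: fail loudly and specifically when system delivered data is missing.
public static class SystemDataGuard
{
    // 'systemRows' stands in for the rows loaded from the database.
    public static string GetRequiredRow(IDictionary<string, string> systemRows, string key)
    {
        string value;
        if (!systemRows.TryGetValue(key, out value))
        {
            throw new InvalidOperationException(
                "System delivered row '" + key + "' is missing from the database. " +
                "It ships with the product and should always be there; check recent database changes.");
        }
        return value;
    }
}
```

With that in place, the failing test would name the missing row outright instead of leaving someone to trace it back from a secondary error at 9pm.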

To those who say "your tests shouldn't hit the db", I say nay. Maybe I won't get the Agile Developer of the Year award, or maybe some in the TDD community will frown upon me, but the db hitting test identified a problem.

We (my team) have lots of tests that hit the db. This one is a bit different since it relies on known system delivered data, but most of the other tests do not. In those cases, they insert all the test data they need, run the tests, then clean up all the test data. We achieve this, in part, by not using identity fields on our setup tables. All our test data gets inserted with ids > 10,000,000. We have a db test harness that's a facade for all of the things we need to do. The finally block of every test calls a method that clears out all of the test data. All of these tests are flagged as "long running"; we run them locally, but not as part of the nightly build. In fact, we have a unit test that stress tests certain procs for thread safety.
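The shape of that pattern, boiled down to a sketch. Our real harness talks to the database; here a dictionary plays the part of a setup table so the sample stands alone, and every name in it is invented for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, in-memory imitation of the db test harness described above.
public sealed class DbTestHarness
{
    public const int TestIdFloor = 10000000;

    private readonly Dictionary<int, string> table; // stands in for a setup table
    private int nextId = TestIdFloor;

    public DbTestHarness(Dictionary<int, string> table)
    {
        this.table = table;
    }

    // No identity column: the harness picks the id, always above the floor.
    public int InsertTestRow(string name)
    {
        int id = ++nextId;
        table[id] = name;
        return id;
    }

    // Anything above the floor is test data by convention, so it is safe to delete.
    public void ClearTestData()
    {
        foreach (int id in table.Keys.Where(k => k > TestIdFloor).ToList())
        {
            table.Remove(id);
        }
    }
}

public static class HarnessDemo
{
    // Returns the number of rows left in the table after the test has cleaned up.
    public static int Run()
    {
        var table = new Dictionary<int, string> { { 1, "real row" } };
        var harness = new DbTestHarness(table);
        try
        {
            int id = harness.InsertTestRow("test theater");
            if (table[id] != "test theater") throw new Exception("read-back failed");
        }
        finally
        {
            harness.ClearTestData();
        }
        return table.Count;
    }
}
```

The id convention is what makes the cleanup dumb and reliable: the harness never has to remember what it inserted.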

That concludes tonight's rant.

Saturday, November 1, 2008

AZURE update

I've only seen my very simple project work twice. The first time was when I first built it; the 2nd time was some random success. Typically, it just times out after 3 minutes.

I'm setting this aside for now. It has inspired me to take on a project I've often talked about doing but never started. So, I'm working on that now. I tend to start a lot of stuff and never finish it. This may be such a project, but at least for the moment, I'm motivated, and I'm working hard on it.

I'm going to use SQLCE as the default data store for the project. I haven't used it before, so it'll be neat. (Of course, you can swap it out with any data store you want, but it'll be ready to run out of the box because of CE)

Friday, October 31, 2008

Resharper: "Use implicitly typed local variable declaration"

That ended up being an oversimplification, though. ReSharper has lots of great goodies in it. Though I would still like Code Rush, ReSharper is great all by itself. I learn to appreciate it more every day.

Except for the "Use implicitly typed local variable declaration" hint.

As an example, I have this line of code:

ServiceDescription description = attribute as ServiceDescription;

the type, ServiceDescription, is underlined with the fore-mentioned hint. Basically, its telling me to define the type as VAR and let the compiler figure it out for me.

I'm not a fan of that suggestion at all. If I know what type it is, then I want to specify the type. I don't need the compiler to figure it out for me. If I end up specifying a less than optimistic type (ie: should've used XmlReader instead of XmlTextReader), then Resharper or FxCop will let me know, and I'll learn from my mistake rather than just let the compiler do its voodoo for me.

However, I don't want to be irrational and just blindly shut off the hint. I wanted to find the justification for that hint, so I started poking around. It seems that there are 2 prevailing schools of though on this: Those that think you should use it for everything, and those that think you should only use it for anonymous types.

After reading a few different things, I have committed to my opinion expressed above: If you can't name the type (because it's anonymous), then use var. Otherwise, specify the type.
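To make the rule concrete, here is a minimal sketch of both cases side by side. The class and method names (VarExamples, DescribeLongTitles) are made up for illustration and don't come from any real project.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class VarExamples
{
    public static List<string> DescribeLongTitles(IEnumerable<string> titles)
    {
        // The projection creates an anonymous type. It has no name that
        // could be written down, so var is the only way to declare it.
        var longTitles = titles
            .Where(t => t.Length > 5)
            .Select(t => new { Title = t, Length = t.Length });

        // The type is known here, so it is spelled out explicitly.
        List<string> descriptions = new List<string>();

        // The loop variable is of the anonymous type, so var again.
        foreach (var item in longTitles)
        {
            descriptions.Add(item.Title + " (" + item.Length + ")");
        }

        return descriptions;
    }
}
```

Calling DescribeLongTitles with "Batman" and "Lost" keeps only "Batman", since it is the only title longer than five characters.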

A guy from Resharper justifies it here:

http://resharper.blogspot.com/2008/03/varification-using-implicitly-typed.html

While interesting, it doesn't sell me. Some comments on some bullets:

- It is required to express variables of anonymous type - No kidding. That's why it was invented.

- It induces better naming for local variables - That's putting a square peg in a round hole. It's handholding at best. If you name your variable CURRENT then its scope should be so small as to always remain obvious what it is. If it isn't obvious, then you named it wrong, and declaring it of type var isn't going to make you name it any better.

- It induces variable initialization. - Again, I don't need VAR to force me to do that.

- It removes code noise. - Maybe. I'd like to see some samples before I buy it.

- It doesn't require a using directive - So what? Are using directives troublesome to anyone? Heck, Resharper puts it in for you. If you don't have Resharper, then CTRL+. will put it in for you.
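For what it's worth, the kind of sample usually offered for the "code noise" bullet is a long generic type that gets written twice on one line. This is only a sketch; NoiseSample and its locals are made-up names, not something from the Resharper post.

```csharp
using System;
using System.Collections.Generic;

public static class NoiseSample
{
    public static bool SameType()
    {
        // Explicit: the full generic type appears on both sides.
        Dictionary<string, List<int>> explicitIndex = new Dictionary<string, List<int>>();

        // Implicit: the compiler infers exactly the same type from the right-hand side.
        var implicitIndex = new Dictionary<string, List<int>>();

        // Both locals hold the same type; only the declaration differs.
        return explicitIndex.GetType() == implicitIndex.GetType();
    }
}
```

Whether the shorter declaration is worth it is still a matter of taste. The `as` example above repeats the type name only once, which is a much weaker case.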

That's my story.

Thursday, October 30, 2008

Windows Azure - Cool

By recommendation of a co-worker who is at PDC, I immediately signed up for AZURE and downloaded all of the associated files. I also had to update this machine to 3.5 SP1 and VS2008 SP1.

The following is just running commentary on what I'm doing as I do it.

Once everything was in, I opened up VS2008 and found the new CLOUD SERVICE project options. I can only guess what a worker is, so I kept it simple and started with just a simple WEB CLOUD SERVICE.

This creates 2 projects: The service itself and a webrole. Again, I can only speculate on how we'll use WEB ROLE based on the name. The service project has a ROLES folder with a reference to the ROLE project. Swell.

The role project looks like a website. It has a web.config, Default.aspx, Default.aspx.cs, and Default.aspx.designer.cs. (I think that last file is new too. If it's been in 2008, then I never noticed it. Don't know if it's an AZURE thing or an SP1 thing.) The service project has two configuration files: a csdef and a cscfg. One defines the service, the other configures it. The cscfg defines the endpoint name, port and protocol. It notes that the port must be 80 in the actual cloud environment, though you can use whatever you want in the dev environment.

I didn't make any changes since I really have no idea what I'm doing yet. I hit the RUN button to see what happened.

The status bar reports "Initializing Local Development Service", or something like that. VS seems to hang for a while, then reports that it can't find .\SQLEXPRESS. That's fine. I don't have SQLEXPRESS. Then it hangs some more, and eventually says that the service timed out.

For kicks, I started downloading sqlexpress 2008. I haven't used 2008 yet, so now's as good a time as any. In the meantime, though, there must be a way to switch the database info.

I found the answer in C:\Program Files\Windows Azure SDK\v1.0\bin\DevelopmentStorage.exe.config. I changed the setting, then hit run in VS2008 again. It reports that DEVELOPMENT STORAGE IS ALREADY RUNNING. ONLY ONE INSTANCE OF THE APPLICATION CAN BE RUN AT THE SAME TIME. Then it hangs again, and eventually comes back with the SERVICE TIME OUT ERROR.

I looked in Task Manager/Processes, and services.msc for any sign of this thing. No luck. That's not to say it's not there, but I didn't see it short of looking at each process individually. I checked for things like AZURE and DEVELOPMENT, etc. (In retrospect, I should have looked through the vs2008 menus and icons. There's probably something there.)

Oh well. Time to restart vs2008. This time, when clicking run, it asks me if it's ok to do some initialization as an administrator. Fine with me; go for it.

It reports this:

Added reservation for 'http://127.0.0.1:10000/' for user account 'jayavst690\jaya'

Added reservation for 'http://127.0.0.1:10001/' for user account 'jayavst690\jaya'

Added reservation for 'http://127.0.0.1:10002/' for user account 'jayavst690\jaya'

Checking if database 'DevelopmentStorageDb' exists on server '.\personal'

Creating database DevelopmentStorageDb

Granting database access to user 'jayavst690\jaya'

The login already has an account under a different user name.

Changed database context to 'DevelopmentStorageDb'.

Adding database role for user jayavst690\jaya

User or role 'jaya' does not exist in this database.

Changed database context to 'DevelopmentStorageDb'.

Initialization successful. The development storage is now ready for use.

Now, there's a DEVELOPMENT STORAGE icon in my system tray. Was that there before? Didn't notice. This time, it gave me a balloon to let me know it was up and running. There wasn't one when it failed.

The development storage app shows that there are 3 services: Blob, Queue, Table. Table is stopped, the other 2 are running. The menu bar doesn't give us a whole lot to do.

I closed it and ran it again. This time, vs2008 hung. It gave me the "vs2008 is waiting for an internal process" message. Swell. I see that in sql server management studio 2005 all the time (usually when working with diagrams), but this is the first time for vs2008.

I got tired of waiting, so I killed it from the task manager. While in there, guess what I noticed: DevelopmentStorage.exe. That definitely wasn't there when I checked earlier.

I restarted vs2008, and ran the project again. Development Storage started, but vs2008 is hanging again.

So far: Lots of hanging.

Now I'm just trying to get it to do something. I read a blog entry that tells me WEB ROLE isn't what I thought it would be (I was guessing just based on the word role). My goal is to get a silly DvdFriend service going. I'll keep you posted.

Thursday, July 31, 2008

Related Products

This involved three new tables:

- ProductGroupType

- ProductGroup

- ProductGroupMembers

Two new views:

- vwProductGroups

- vwProductGroupMembers

Stored Procedure:

- GetRelatedProducts

I added a new object data source to the page, and a gridview. The ODS calls a method that calls the stored procedure and returns a datatable (keep it simple).
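The method behind the ODS looks roughly like the sketch below. It's written against the generic System.Data interfaces so it isn't tied to one provider. The @ProductId parameter name and the int id type are assumptions for illustration; only the GetRelatedProducts procedure name comes from the list above.

```csharp
using System.Data;

public static class RelatedProducts
{
    // Assumes the caller passes in a connection that is already open.
    public static DataTable GetRelatedProducts(IDbConnection connection, int productId)
    {
        using (IDbCommand command = connection.CreateCommand())
        {
            command.CommandText = "GetRelatedProducts";
            command.CommandType = CommandType.StoredProcedure;

            // Hypothetical parameter name; the real procedure's signature may differ.
            IDbDataParameter parameter = command.CreateParameter();
            parameter.ParameterName = "@ProductId";
            parameter.Value = productId;
            command.Parameters.Add(parameter);

            using (IDataReader reader = command.ExecuteReader())
            {
                return ReadTable(reader);
            }
        }
    }

    // Copies every row from the reader into a DataTable the gridview can bind to.
    public static DataTable ReadTable(IDataReader reader)
    {
        DataTable table = new DataTable("RelatedProducts");
        table.Load(reader);
        return table;
    }
}
```

Returning a plain DataTable keeps the ODS wiring trivial: the gridview binds to whatever columns the procedure returns.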

Currently, it just lists them with links. That will improve. Also, a product may be associated with multiple groups. The page will have to improve to show the different groups. For now, they're all just merged into one distinct list.

I'm not going to make an effort to backfill groups. But, as new movies come up, I'll create new groups as appropriate. So far, there are groups for Stargate, Lost Boys, Hellboy, and Batman.

Wednesday, July 30, 2008

MVC Preview 4

Monday, July 28, 2008

Added News and Feed

The News support was already in there. It was displayed on the page somewhere about a year ago, but it didn't look good or wasn't useful, so I got rid of it.

Fortunately, the method to retrieve it is still there. Basically, I just call GetRecentEntries(5, "news", true).

Last 5 entries; news zone; get the entire text rather than just the title.

There's a news feed too, but it's going to need work. The title of a blog entry may contain HTML (e.g., the link to Dark City), but a syndication title may not. I have to change the entry page to distinguish between the title and what the title links to (if anything). In the meantime, it tears the html out of the title if there is any. Then, for the permalink, it extracts the link. If the link exists, it uses it. Otherwise, it just links to the main page.
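The title cleanup can be sketched with a couple of regular expressions. This is an approximation, not the actual feed code: FeedTitle and its members are made-up names, and regex-based HTML stripping is only good enough for simple titles like these.

```csharp
using System.Text.RegularExpressions;

public static class FeedTitle
{
    // Matches any HTML tag, opening or closing.
    private static readonly Regex Tag = new Regex("<[^>]+>");

    // Captures the value of the first href attribute, in single or double quotes.
    private static readonly Regex Href =
        new Regex("href\\s*=\\s*[\"']([^\"']+)[\"']", RegexOptions.IgnoreCase);

    // Tears the html out of the title, leaving only the text.
    public static string StripHtml(string title)
    {
        return Tag.Replace(title, string.Empty).Trim();
    }

    // Uses the link inside the title if there is one; otherwise the fallback.
    public static string GetPermalink(string title, string fallbackUrl)
    {
        Match match = Href.Match(title);
        return match.Success ? match.Groups[1].Value : fallbackUrl;
    }
}
```

The fallback URL would be the main page, so an entry with a plain-text title still gets a usable permalink.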

Sunday, July 27, 2008

Web delay fixed

It never really bothered me much because there are only a few visitors a day, but now that the embedding is enabled, it's more intrusive. The hamletcode blog would pause as it waited for the dvdfriend site to fire up to serve the demo embedded review.

I poked around in the pool settings and found that the worker process was set to shut down after 5 minutes of being idle. I disabled that. I also saw that it was set to recycle every 29 hours, so I got rid of that. There's really no reason for the worker process to need to be recycled, but this will be a good test to make sure.

Since I was in there anyway, I changed the session timeout from 20 to 60 minutes. Effectively, the timeout was 5 minutes anyway since there's rarely more than one person writing a review at any given time.

This should resolve all the timing issues. Also, it will help cover up a bug with the review page: if the session times out while you're writing the review, you lose it. That's definitely very bogus and I have no reason to avoid fixing it other than a complete lack of interest. In fact, that's the last thing that's stopping me from removing the "under construction" label.

In conclusion

Reviews can now be embedded

The code goodies follow. ReviewHtml.GetReview() simply builds a bunch of JavaScript document.write() calls.

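using System;
using System.IO;
using System.ServiceModel;
using System.ServiceModel.Web;
using System.Text;

[ServiceContract]
public interface IContentService
{
    // Maps GET review/{reviewId} to this operation. The body is sent bare, with no wrapper element.
    [OperationContract]
    [WebGet(UriTemplate = "review/{reviewId}", BodyStyle = WebMessageBodyStyle.Bare, ResponseFormat = WebMessageFormat.Xml)]
    Stream GetReview(string reviewId);
}

public class ContentService : IContentService
{
    public Stream GetReview(string reviewId)
    {
        // Return the generated script as a raw stream so WCF doesn't wrap or encode it.
        return new MemoryStream(Encoding.UTF8.GetBytes(ReviewHtml.GetReview(new Guid(reviewId))));
    }
}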

Here are some interesting things I learned:

- I had to return the text as a stream rather than as text. If you return it as just a string, then there's always some type of serialization wrapper around it. Additionally, the generated html tags get encoded. So, we get &lt;td&gt; instead of <td>.

- In the UriTemplate, you specify the parameters.

UriTemplate = "review/{reviewId}"

It maps the value in {reviewId} to the reviewId parameter of the method. That's cool; very MVCish. Unlike MVC, however, it must be a string. In this case, the ID is a guid, but the method must accept a string.

I find this disappointing and, if I had to guess, I'd say that will change. The framework is certainly capable of converting known types for us.
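Until the framework does the conversion, the string has to be validated by hand, because new Guid(reviewId) throws a FormatException on anything that isn't a guid. Here's a small sketch of a safer conversion. ReviewIds and TryParseReviewId are made-up names, and Guid.TryParse only exists from .NET 4 onward, so on 3.5 the equivalent is catching the FormatException.

```csharp
using System;

public static class ReviewIds
{
    // Returns false instead of throwing when the URI segment is not a valid guid.
    public static bool TryParseReviewId(string reviewId, out Guid id)
    {
        return Guid.TryParse(reviewId, out id);
    }
}
```

With something like this in place, the service method could return an empty response for a bad id instead of faulting.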

I really just sort of tripped through the WCF stuff in this case. I have to read up on the new REST capabilities.

Wednesday, July 23, 2008

Good bye faithful printer.... Good bye!

Well, see... there. I've lied already. In the very first sentence I'm lying like a president. The printer isn't so much "passing" as it is being put down.

I have a Lexmark X125 all in one printer. It is AT LEAST 5 years old; possibly even more than 6, but I have definite milestones to peg it at least 5.

It's a cool little printer that I got for cheap and has lasted, to some extent, all this time. It still works. In fact, shortly after I bought it, I bought one for my parents too. (Coincidentally, last night I received a call about a new printer they bought. I believe that they too were still using their X125 until recently, but unconfirmed.)

So why the mixed feelings? On one hand, I bought a cheap printer and used it for 5 years. On the other hand, it annoyed me every time.

Here's the thing: the drivers suck. They always have. Lexmark support was 0 help... I gave up years ago. The printer will work for a while, then you have to kill some processes in order to get it to print again. I exchanged many emails with them just trying to get them to come clean and say "sorry, we suck", but they wouldn't.

The next logical question may be, "How did you put up with that for 5 years, you poor poor soul!?". The answer is simple: I don't do a heck of a lot of printing. I bought a box of paper years ago and I still have most of the reams. I just don't have a need or a desire to print. If I want to read something from the computer, I just read it on the computer. It doesn't have to be paper. I keep all our digital images as digital images... I don't print them.

Lately, I've had a need to print some stuff, for expense reports, on a monthly basis. My luck has been limited. I occasionally go to the office with the intent of printing the stuff, but then it slips my mind and I leave empty handed. Ick. I also have to fax my receipts, and the X125 was being difficult. It would often say "replace cartridge" even though it was a new cartridge. After multiple attempts, I thought it was dead. Then, it finally worked.

So now, at long last, we're at the point where it is essentially unusable. I want to be one of the cool kids that simply clicks "print" and the thing prints. Is that too much to ask? Am I being a snob by not wanting to fight to print for 5 minutes a page? I don't think so, but I value your opinion, so let me know.

Due to the fact that I work from home, my esteemed employer found it in its heart (and wallet) to equip me with a brand new HP5610. I plugged it in and clicked print. Guess what... it printed! Now I feel like a king. Rejoice!

I'll cart the ole X125 up to the recycling center on my next trip. When I dump it in the box, perhaps I will pause for just a moment and reflect on the times gone by, both good and bad, but I make no promises.

Coming Soon - Embedded Reviews

Anyway, I started looking into this. The first thing that jumped to mind was left over from 1995: Add an iframe. The second thing to jump to mind was: don't be ridiculous.

I started searching on the current swell ways to do this. The goal, per normal, is keep it simple. I just want the user to drop a little piece of something on their page and have it work. I came across XSS pretty quick, then avoided it since XSS is often associated with bad mojo due to attacks. I looked at the object tag... Couldn't get it to work with external web pages.

Eventually, I ended up back to XSS.

Created A Page called ReviewScript.aspx

I wiped out everything from the ASPX except for the server tags at the top.

The PageLoad calls Response.Clear(). It then builds a big piece of javascript that, basically, generates some html then writes it to the document.
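The script-building part can be sketched like this. It's a simplified stand-in, not the actual ReviewScript.aspx code: ReviewScriptBuilder, BuildScript, and EscapeForJavaScript are made-up names, and the escaping covers only the basics.

```csharp
using System.Text;

public static class ReviewScriptBuilder
{
    // Turns each non-empty line of html into one document.write() call.
    public static string BuildScript(string html)
    {
        StringBuilder script = new StringBuilder();
        foreach (string line in html.Split('\n'))
        {
            string trimmed = line.Trim();
            if (trimmed.Length == 0)
            {
                continue;
            }

            script.Append("document.write('")
                  .Append(EscapeForJavaScript(trimmed))
                  .Append("');\n");
        }

        return script.ToString();
    }

    // Escapes backslashes and single quotes so the html survives inside a
    // single-quoted JavaScript string, and breaks up "</" so a closing tag
    // in the html can't end the surrounding script element early.
    private static string EscapeForJavaScript(string text)
    {
        return text.Replace("\\", "\\\\")
                   .Replace("'", "\\'")
                   .Replace("</", "<\\/");
    }
}
```

In the page itself, the load handler would call Response.Clear(), set Response.ContentType to "text/javascript", and Response.Write() the result.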

Add a script tag to the page that references ReviewScript.aspx

Every time I paste any type of tag into this stupid thing, it loses it. And I'm currently too lazy to deal with a screen shot. So, mentally fill in angle brackets.

script language='javascript' type='text/javascript' src='http://www.dvdfriend.us/ReviewScript.aspx?id=xxx'

/script

Sweet. I started by including that on the dvdfriend main page (dev version) so that I can compare what's generated to what shows up on the page.

Templates / Make it look as it does on the main page

The generated html is based on a template. The default template is going to look exactly like a review does on the main page. I started by embedding the script on the main page so that I could look at them next to each other. Once it was close, I moved it to another site altogether.

Create a new CSS

As soon as I imported the dvdfriend.css to the other site, it messed up the entire page. That was expected. I created a new css called external.css and copied over only the styles I needed. I renamed them all with a prefix of DF, just to keep them separated.

Incidentally, the css is included by document.writing a link tag.

That pretty much did it.

Template - So Far

The template has these tokens so far:

DvdFriendCss

Rating

Title

ProductTypeImage

ProductName

ProductId

CreateDate

Author - pending. Have to populate this

RatingClass

AuthorLink

ProductLink

ReadLink

The list will grow. Most of them are just pieces of data so that you can build it any way you want. Some of them are more generic to give you something to start with. The LINK tokens, for example, automatically create the links as you see them on the home page now.
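Token replacement can be sketched as a single regex pass over the template. The {Token} delimiter syntax is an assumption for illustration (the post doesn't say how tokens are marked), and ReviewTemplate and Render are made-up names.

```csharp
using System.Collections.Generic;
using System.Text.RegularExpressions;

public static class ReviewTemplate
{
    // Assumed token syntax: a name wrapped in curly braces, e.g. {Title}.
    private static readonly Regex Token = new Regex(@"\{(\w+)\}");

    // Replaces every known token with its value and leaves unknown tokens alone.
    public static string Render(string template, IDictionary<string, string> values)
    {
        return Token.Replace(template, match =>
        {
            string value;
            return values.TryGetValue(match.Groups[1].Value, out value)
                ? value
                : match.Value;
        });
    }
}
```

Leaving unknown tokens untouched makes a typo in a template easy to spot in the rendered output.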

TODO

- See if there is a better way to include the CSS. If there are multiple embeds on the same page, it will import the css multiple times. Would rather do it through javascript.

- Retrieve the author. The page is built from a datatable. The script is built from a blog object which, mysteriously, doesn't already have an AUTHOR property exposed.

- Work out some additional css issues. It almost looks like it does on the site, but I still have some font issues to resolve.

- Test in production environment. I've only used it on my local machine. Let's see if it actually works out there. Furthermore, let's see what types of things, if any, prevent the xss from firing.

- LATER: Allow for users to create their own templates using the available tokens. I think that every template will be available to every user, but it can only be edited by the person who created it. We'll see.

- Brush my teeth and go to bed

- Serve it as a WCF service rather than an aspx page. The aspx is a quick and dirty just to get it going. I will convert it to a WCF service much like the RSS feed. The one missing piece of info there is how to pass parameters. It shouldn't be a big deal; I just have to look into it.

Screenshot

Here's what it looks like embedded on the now neglected Clan Friend site.

The background of the review is always white. It's not inheriting it from the parent element.